2025-07-03 15:56:00

Backups are important! Remember kids, Jesus saves (and makes incremental backups)!

Jokes aside, having a solid backup of everything your company or life depends on is crucial. Don't rely on your computers always working the way they should and don't assume that your cloud provider makes backups of all your data.

Case in point: Microsoft cloud services, like MS365, OneDrive or Azure, may offer highly available storage. They may even offer some additional backup services for a fee. But if, for some godforsaken reason, things go really wrong, you'll lose it all.

Both my companies use Microsoft MS365 and Azure Active Directory (aka Entra ID). For now they rely entirely on those; it's their sole productivity base.

I've got the MS365 backups covered (including e-mail, OneDrive, Teams etc) using Synology's wonderful Active Backup software. At the time I'd bought a Synology 19" rackable system, which includes the full license for Active Backup for MS365. How awesome is that?! Buy a NAS, get a full cloud backup included!

Yes, I've tested the backups and restoration: the Active Backup tooling is wonderful!

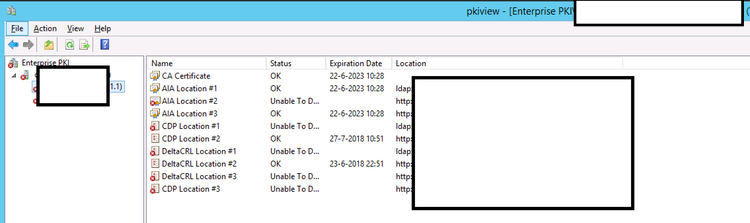

What it doesn't have, is backups for my IAM and RBAC user account administration, that is Entra ID. Unfortunately there's no Synology built-in solution for it either.

I did some investigation and there are quite a few companies offering SaaS solutions for Entra ID backups. Companies big and small, US and EU, affordable and expensive. Ironically, most of the smaller SaaS providers store your backups on Azure. :D

Of the SaaS providers, Keepit.com felt the best to me as they back up to their privately owned and built cloud environment in the EU. Ruud, from LazyAdmin, trusts Afi.ai which also looks decent.

Maybe I'm paranoid or overly careful, but it just doesn't sit right with me. I'm giving some third party full read-write access to my company's IAM and RBAC systems. If they get hacked, I'm fully pwned. I don't like it. Sure, all the big SaaS providers say they're trusted and used by big international companies! But... no I'm not doing it.

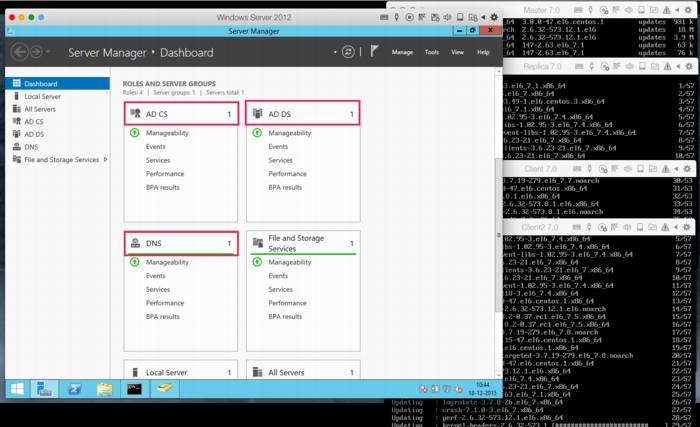

I chose to run my Entra ID backups on-prem, exactly like I'm running my MS365 backups on-prem. And there's one trusted company who offers that: Veeam.

Veeam Backup for Entra ID is offered both as a SaaS solution and as on-prem, locally hosted software (deployment options here). Unfortunately their messaging is conflicting!

My experience:

There is only one thing remaining to complete my 3-2-1 backup strategy: off-site, offline storage, for both MS365 and Entra ID. And luckily my new Veeam backup server will help with that as well!

The SaaS services like Keepit.com, Afi.ai or Veeam's own service offer interesting pricing. While Keepit.com don't tell you their pricing, Afi want $36 per user per year and Veeam's ask is $14.10 per user per year. Afi also includes MS365, which is of course a nice bargain.

If like me you want to run things on-prem, other costs need to be factored in.

For anything Entra ID with fewer than 100 users, Veeam itself is free thanks to their very generous Community Edition. Of course you do need to run it on something. I've opted for Windows Server 2025 in a 4-core VM, which will set me back €233 per year (excl VAT).

For hardware I'm using a Dell Optiplex, which I got for around €590 (excl VAT). The Optiplex will run a few other VMs and containers as well, which means I get to spread the costs a little bit.

Would Veeam or Afi SaaS be cheaper in the long run? Yes. $420 per three years SaaS, vs around €900 ($1060) per three years in on-prem hard- and software.
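The SaaS side of that comparison is plain multiplication. Here's a rough sketch in shell. Mind the assumptions: the post doesn't state a user count, so the 10 users below are a guess that happens to land close to the quoted $420, and `saas_cost` is a made-up helper name.

```shell
#!/bin/bash
# saas_cost USERS PRICE_PER_USER_PER_YEAR YEARS
# Prints the total subscription cost with two decimals.
saas_cost() {
  awk -v u="$1" -v p="$2" -v y="$3" 'BEGIN { printf "%.2f\n", u * p * y }'
}

# Assumed: 10 users (not stated in the post), three years,
# Veeam's quoted list price of $14.10 per user per year.
saas_cost 10 14.10 3
```

With those assumed inputs it prints 423.00. Swap in your own head count to see where the break-even with on-prem sits for you.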

So why do it?

For the learning experience and for my paranoia. :)

kilala.nl tags: work, sysadmin,


2025-06-26 18:26:00

CertNexus are a vendor for professional certifications in the fields of IT, development and information security. I hold their CSC certification (my CFR lapsed) and teach their CSC (cyber secure coder) class.

CSC-210 is a few years old, so they're now replacing it with the new CSD-110: cyber secure software developer. A few weeks ago they published the curriculum and exam blueprint and this week they opened the beta-testing for the CSD-110 exam.

I have not had a chance to see their new training materials, which I assume they are still updating and making changes to. But today I sat the CSD-110 exam, to help them improve and test the new test.

In short: it was good!

I feel that these questions were well written! Some were a bit long to read, but overall the exam questions are not dry and factual; they require understanding and insight. They won't ask "what is the definition of phase X of the ATT&CK framework?", but more along the lines of "you observe X, Y and Z in your network and your team has determined that A and B. At which point in their kill chain are the attackers right now?".

With CompTIA beta exams I frequently leave large amounts of comments and feedback, pointing at bad wording or answers and questions that need improvement. With this exam I left very little feedback, meaning I really didn't see anything that wasn't good.

There was a decent diversity to the 80 questions I saw, although I would have liked to see more code and technical questions. It's called cyber secure software developer after all. But I assume that CertNexus has a large pool of questions and I simply got one particular mix of questions.

Having taken both CSC-210 and CSD-110 I will continue to stand behind this exam and training materials. I have now taught this class six times and every time the students come away feeling they learned a lot of useful new background. And as I said: I think this is honestly a good exam.

kilala.nl tags: work, studies,


2025-06-03 13:11:00

I've been a Luddite on the subject of applied AI, such as LLMs (large language models). Like cryptocoin and blockchain stuff, I've been avoiding the subject as I've been sceptical as to the actual value of the applied products.

Now I'm finally coming around and I've decided I need to learn how these things work. Not as in "how can I use them?", but "what makes them tick?".

There are a few maths and programming YouTubers whose work I really appreciate, insofar that they've clarified for me how LLMs work internally.

If you have no more than one hour, watch Grant Sanderson's talk where he visualizes the internal workings of LLMs in general.

Then if you have days and days at your disposal:

I've learned a lot so far! I'm happily reassured that I haven't been lying to students about the capabilities and impossibilities involving general purpose LLMs and IT work.

3Blue1Brown's series on neural networks, starting with video 1 here, helped me really understand the underlying functions and concepts that show how "AI" currently works. The video series goes over the classical "recognition of handwritten numbers" example and explains in a series of videos what the neurons in a net do and how. And more importantly it clarifies why, to us humans and even the AI's creators, it's completely invisible WHY or HOW an AI comes to certain decisions. It's not transparent.

What got my rabbit holing started? A recent Computerphile video called "The forbidden AI technique". Chana Messinger goes over research by OpenAI that talks about their new LLM "deciding", or "obfuscating", or "lying" and "getting penalized or rewarded" which had me completely confused.

Up until this week I saw LLMs as purely statistical engines, looking for "the next most logical word to say". Which they indeed kind of are. But the anthropomorphization of the LLM is what got me so confused! What did they mean by reward or punishment? In retrospect, literally a trainer telling the LLM "this result was good, this result was bad". And what constitutes "lying" or "obfuscation"? The LLM adjusting its weights, bias and parameters during training, so certain chains of words would no longer be given as output.

It's like Hagrid's "Shouldn't've said that, I should not have said that..." realization.

Now, to learn a lot more!

kilala.nl tags: work, studies,


2025-04-06 11:11:00

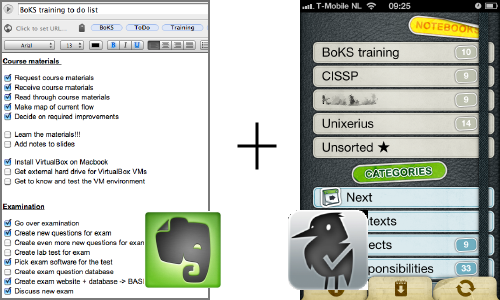

In Dutch we have an acronym SOG (studieontwijkend gedrag), which we have dutifully verbified into SOGgen.

Hanze Hogeschool even made a sketch about it.

In English it would translate to SAB and SAB-ing: study-avoidant behavior. But you all would better know it by its common name: procrastination.

Heck. I'm doing it right now!

In January I jumped on the wagon to work towards two heavy exams: CPTS and OSCP+.

January and February I went at it at the strongest pace I could hold up, but in March things started falling apart. Between three customers, preparations to teach three classes, our own household, studying and a terminally ill cat I was over-working myself.

Around that time I also hit the Active Directory section of the CPTS study materials. I feel that Hack The Box have made that section too large, insofar that they should have divided it into multiple sections. In its current shape it can feel insurmountable in how large the body of knowledge is. It just feels endless, where other sections had you power through in a day or two.

Halfway through March I decided to cut back drastically on studying. It took many nudges, including a tarot spread (yes, I'll talk about that another time). So I've taken quite some time for myself, to read and relax.

Admittedly, it's hard to get back into the saddle. And I really should. Just not at my original breakneck pace.

kilala.nl tags: work, studies,


2025-02-28 21:16:00

I was today-years-old when I realized something about SSH that I hadn't realized before.

A student of mine was using SSH to connect between two Linux hosts and he wondered if it's possible to temporarily pause or interrupt the SSH session, so he can run a few commands on the source / originating host.

I thought, surely there must be! And there is! I just never realized before. :)

Way way way back, twenty years ago, we used Cyclades terminal servers at ${Customer}. Nifty rackmounted boxes that hook up to the network and provide SSH access to 24 or more serial ports.

I remembered from back then that SSH had a command to immediately kill an SSH connection: ~.

The tilde being the stop / escape character for SSH and the dot being the kill command. You could also quickly type ~? in an SSH session to pull up a menu.

To answer my student's question, I hopped into my Fedora box from Windows with SSH and then did another SSH to Ubuntu. That's one SSH after connecting using another.

You can stack multiple tildes to indicate which SSH client you're talking to. Typing ~. kills the Windows to Fedora connection, while ~~. kills the Fedora to Ubuntu connection.

Looking at the ~? menu I noticed a few neat options, including ~^Z.

In Unix terminals, ^Z (ctrl Z) is used to send a suspend signal (SIGTSTP) to your running process. So indeed, the following happened:

tess@ubuntu $ hostname

ubuntu

tess@ubuntu $ ~^Z

bash: suspended ssh

tess@fedora $ hostname

fedora

tess@fedora $ fg

tess@ubuntu

It works! :D
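The tilde stacking can be put into a tiny helper. This is only a sketch: `ssh_escape` is an illustrative name, not something that ships with OpenSSH, and it merely prints which keys to type. Keep in mind that ssh only recognizes the escape character at the start of a line, so press Enter first.

```shell
#!/bin/bash
# ssh_escape DEPTH COMMAND
# DEPTH   = which ssh client in the chain should act (1 = outermost)
# COMMAND = escape command character, e.g. "." to disconnect, "?" for help
# Prints the keys to type right after pressing Enter.
ssh_escape() {
  local depth="$1" cmd="$2" tildes=""
  local i
  for ((i = 0; i < depth; i++)); do
    tildes+="~"
  done
  printf '%s%s\n' "$tildes" "$cmd"
}

ssh_escape 1 .     # kill the Windows-to-Fedora hop
ssh_escape 2 .     # kill the Fedora-to-Ubuntu hop
ssh_escape 2 '?'   # help menu of the second ssh client
```

Each ssh client swallows one tilde of a doubled pair and passes the rest along, which is why one extra tilde per hop does the trick.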

kilala.nl tags: work, mentor, studies,


2025-02-23 10:23:00

I'm on various IT-learning Discords, to my own detriment sometimes, that's no secret.

On one of the servers, three or four of us experienced folks have been coaching one particular learner who's been on A+ 1101 for six months now. Along the way, the student has had a much lower pace than the average student and almost every topic leads to days-long discussions on intricacies or on misunderstandings of the topic.

It's to such a point that some of the new faces (who join the server every week) utter things like "surely you're trolling" and "you can't be serious".

Among the seniors we've discussed the matter and we're sure this learner is not a troll. Instead there are a number of clues that point at either a learning disability, neurodivergence or simply a somewhat lower cognitive capability. These include:

Recognizing such indicators is one thing, knowing how to deal with them is another thing entirely. Unfortunately we're not quite equipped for it.

For one, each of us is just another visitor of the Discord server. We do this in our spare time, to help others and to have a little fun along the way. It's not within our capabilities to spend 4+ hours every day providing 1:1 coaching to this learner.

Sub-optimal factors for the learner:

I have theorized that the learner in question surely would be better served by attending a "real" school: brick & mortar buildings, full-on interaction between students and teachers, a teacher who can immediately notice that a student is struggling. Unfortunately, going to such a school is not always an option given factors like location, region, personal budget and their social situation or upbringing.

It's been an interesting journey.

Just today I've had to remind some of the others in the server that not every brain operates in the same fashion. Case in point:


2025-02-12 19:52:00

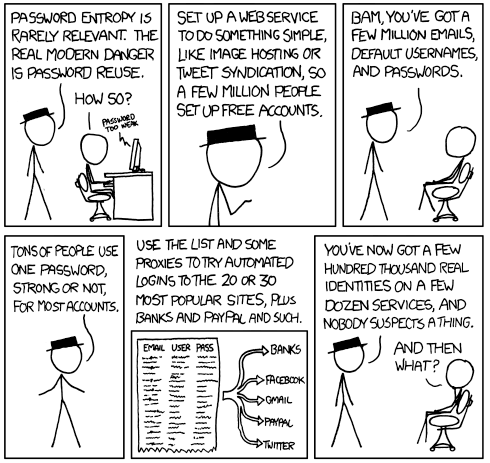

On the CompTIA Instructor's Network, Greg wondered whether DOGE (the newly minted NGO in the US) is actually a threat to national security. A lively discussion broke out, where Hank remarked:

"In this case, I am not sure how to discuss the technical issues without politics."

I suggested that we can discuss the issue, from the point of view of the aspects of infosec which we teach: Risk management. Threat modeling. Assumed breach. Access controls. Data destruction.

So here's a threat modeling exercise:

The case:

Question to the students:

Which security controls can we put in place to disrupt the threat actor's activities and to prevent or mitigate the threat actor's interests and activities?


2025-01-20 20:59:00

A few days ago I was moping about how my laptops and other computers are too slow for password cracking. Someone tipped me off about vast.ai, which offers GPU-workloads in the cloud.

It cost me $0.04 to rent fifteen minutes of time on someone's 4090. The actual cracking took less than a minute, the other fourteen were spent moving in my password list and the hashes.

This is great :D

kilala.nl tags: work, studies,


2025-01-06 15:37:00

Just a gentle reminder that you really shouldn't try to use Hashcat (the password cracker) in a virtual machine. Not even in UTM on aarch64.

Instead, install it on your host OS so it can properly make use of the GPU in your computer for accelerated cracking. On MacOS it's as simple as "brew install hashcat".

It's not super-fast on my M2 Macbook Air, I'll give you that.

Running: hashcat --username -m 7300 ipmi.txt -a 3 "?1?1?1?1?1?1?1?1" -1 "?d?u"

Hash.Mode........: 7300 (IPMI2 RAKP HMAC-SHA1)

Hash.Target......: 0d7bd5208204000049bc6aa3b42dabc39b36794995510217ff9...c8bbc7

Guess.Mask.......: ?1?1?1?1?1?1?1?1 [8]

Guess.Charset....: -1 ?d?u, -2 Undefined, -3 Undefined, -4 Undefined

Speed.#1.........: 134.2 MH/s (10.98ms) @ Accel:192 Loops:16 Thr:64 Vec:1

Hardware.Mon.#1..: Util:100%

EDIT:

I've reconsidered. I uninstalled the Homebrew version of Hashcat and built it from source. Running it now, it doesn't use Metal but OpenCL. Performance is somewhat better on my M2.

Hash.Mode........: 7300 (IPMI2 RAKP HMAC-SHA1)

Hash.Target......: 0d7bd5208204000049bc6aa3b42dabc39b36794995510217ff9...c8bbc7

Guess.Mask.......: ?1?1?1?1?1?1?1?1 [8]

Guess.Charset....: -1 ?d?u, -2 Undefined, -3 Undefined, -4 Undefined

Speed.#2.........: 157.7 MH/s (10.20ms) @ Accel:256 Loops:512 Thr:64 Vec:1

Hardware.Mon.#2..: Util:100%
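To put those speeds in perspective, here's a back-of-the-envelope sketch of how long the mask above takes to exhaust. The charset ?d?u has 10 digits plus 26 uppercase letters, so 36 symbols over 8 positions. The function names `keyspace` and `hours_to_exhaust` are made up for this example; the speeds are the two readings shown above, taking MH/s as 10^6 hashes per second.

```shell
#!/bin/bash
# keyspace CHARSET_SIZE LENGTH -> number of candidates in the mask
keyspace() {
  echo $(( $1 ** $2 ))
}

# hours_to_exhaust CANDIDATES SPEED_IN_MH_PER_S -> worst-case hours
hours_to_exhaust() {
  awk -v n="$1" -v mhs="$2" 'BEGIN { printf "%.1f\n", n / (mhs * 1000000) / 3600 }'
}

total=$(keyspace 36 8)   # ?d (10) + ?u (26) = 36 symbols, 8 positions
echo "candidates:     $total"
echo "Homebrew build: $(hours_to_exhaust "$total" 134.2) hours"
echo "source build:   $(hours_to_exhaust "$total" 157.7) hours"
```

That's 2821109907456 candidates, so roughly 5.8 hours versus 5.0 hours to run the whole mask in the worst case. On average you'd hit the password halfway through.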

kilala.nl tags: work, studies,


2025-01-06 14:28:00

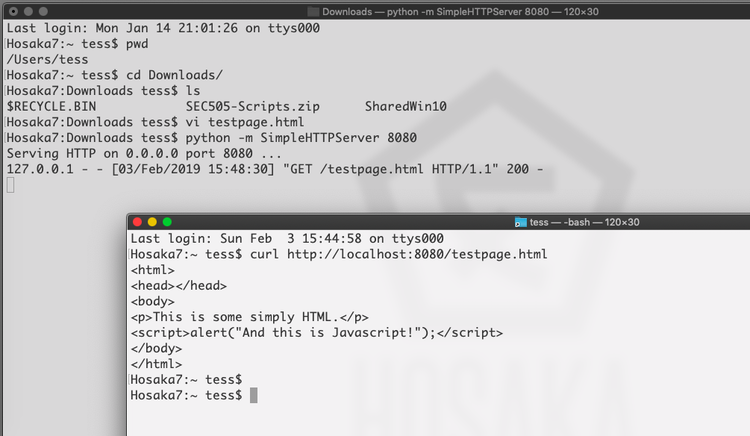

Hack The Box have a nice lab, where we're introduced to the basics of poking at the Oracle TNS service.

In this lab, they offer a set of commands to download and set up ODAT (Oracle Database Attacking Tool) on your Linux workstation. They assume you're working on Parrot OS, on x86_64.

Meanwhile, I'm working on Kali Linux, inside UTM, in MacOS on aarch64. The instructions are different. Here's what worked for me.

#!/bin/bash

sudo apt-get install libaio1t64 python3-dev alien -y

cd ~

git clone https://github.com/quentinhardy/odat.git

cd odat/

git submodule init

git submodule update

wget https://download.oracle.com/otn_software/linux/instantclient/instantclient-basic-linux-arm64.zip

unzip instantclient-basic-linux-arm64.zip

wget https://download.oracle.com/otn_software/linux/instantclient/instantclient-sqlplus-linux-arm64.zip

unzip instantclient-sqlplus-linux-arm64.zip

export LD_LIBRARY_PATH=$(pwd)/instantclient_19_25:$LD_LIBRARY_PATH

export PATH="$PATH:$(pwd)/instantclient_19_25"

echo "export LD_LIBRARY_PATH=\"$(pwd)/instantclient_19_25:\$LD_LIBRARY_PATH\"" >> ~/.bashrc

echo "export PATH=\"\$PATH:$(pwd)/instantclient_19_25\"" >> ~/.bashrc

sudo apt-get install -y python3-cx-oracle python3-scapy

sudo apt-get install -y python3-colorlog python3-termcolor python3-passlib python3-pycryptodome python3-pyinstaller python3-libnmap

sudo apt-get install -y build-essential libgmp-dev

Next to this, you will also need to make one small change to CVE_2012_3137.py in the ODAT directory. The import statement at the top (at least on my Kali box) needs to be changed to read: "from Cryptodome.Cipher import AES".
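If you'd rather script that edit than open an editor, something like the following could do it. It's a sketch under two assumptions: that the original line in the file reads `from Crypto.Cipher import AES`, and that on your system the AES module lives under `Cryptodome.Cipher`. Check both before running it. `patch_aes_import` is a hypothetical helper name.

```shell
#!/bin/bash
# patch_aes_import FILE
# Rewrites the AES import to the Cryptodome namespace.
# A copy of the original is kept as FILE.bak.
patch_aes_import() {
  local file="$1"
  cp "$file" "$file.bak" || return 1
  sed 's/^from Crypto\.Cipher import AES/from Cryptodome.Cipher import AES/' \
    "$file.bak" > "$file"
}

# Usage, from inside the odat/ directory:
#   patch_aes_import CVE_2012_3137.py
```

If the import line looks different in your checkout, the sed expression simply won't match and the file stays as it was.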

kilala.nl tags: work, studies,


2025-01-05 19:03:00

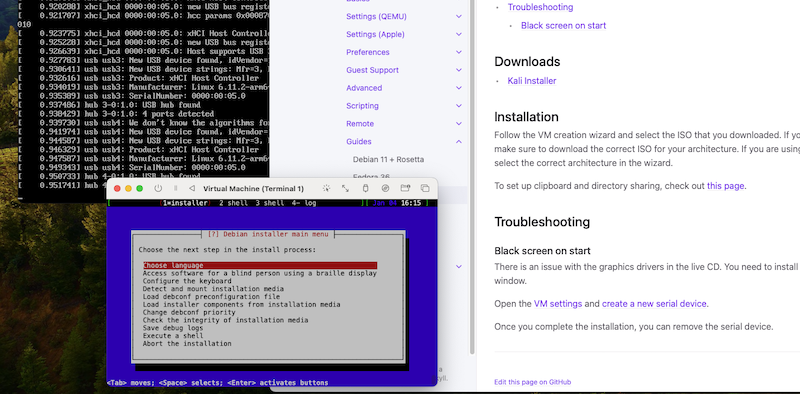

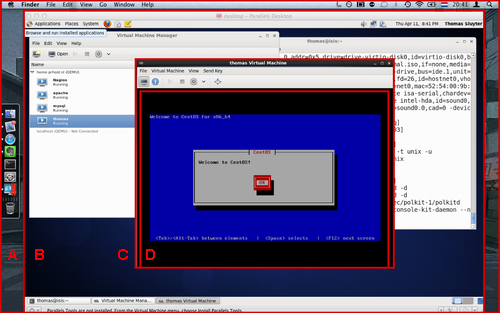

I know, I know, I'm not a fan of Kali Linux. But for the OSCP exam it's kind of required that you use it, so I thought I'd set it up on my Mac workstations. Both have an M1/M2 ARM processor, meaning I need to forego the usual VirtualBox + x86 install. Instead, I'm using UTM.

Luckily it's a perfectly viable setup, with a caveat.

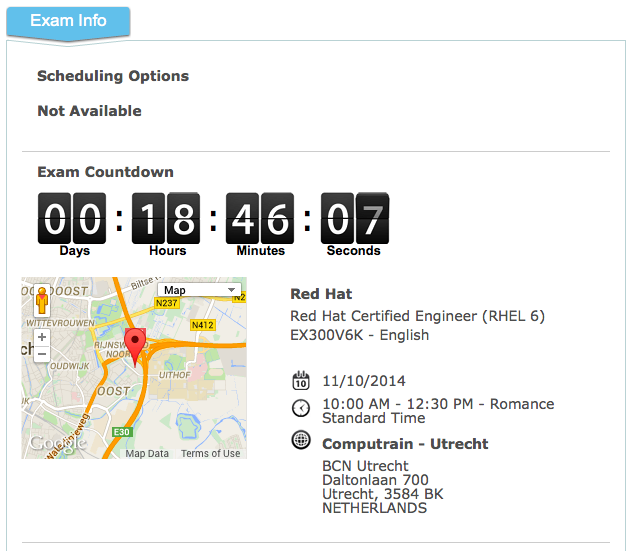

The installer will show a black screen, or if you run it in "expert mode" it'll look like the system got stuck booting. As per the screenshot above.

Turns out, it's running just fine, but the installer lacks the required drivers to make the UTM / Qemu video display work. The UTM documentation gives clear guidance: you need to enable a serial port on the VM, at least for the duration of the install. The installation TUI will be available on the serial port (also shown in the screenshot).

After that, everything works perfectly fine!

Except that cmd-tab-ing back and forth between UTM and other apps keeps popping up the applications menu in XFCE. Aggravating!

Luckily, that's easily solved by going into the XFCE Settings Manager > Keyboard > Shortcuts and disabling the "Super L" shortcut for "xfce4-popup-whiskermenu".

Also: if you want to enable the sharing of files and if you want to have copy/paste between MacOS and Kali, you will need to install two additional packages: spice-vdagent and spice-webdavd.

Also: if you'd like to have a permanent mount of that shared folder, via VirtFS, add this to /etc/fstab:

share /mnt/utm 9p trans=virtio,version=9p2000.L,rw,_netdev,nofail,auto 0 0

Full details here in the UTM docu.
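To avoid pasting that fstab line twice by accident, you could wrap it in a small function. This is just a sketch: `add_utm_share` is a name I made up, and it takes the file to edit as an argument so you can try it on a scratch file first.

```shell
#!/bin/bash
# add_utm_share FSTAB_FILE
# Appends the VirtFS entry unless an identical line is already present.
add_utm_share() {
  local fstab="$1"
  local entry='share /mnt/utm 9p trans=virtio,version=9p2000.L,rw,_netdev,nofail,auto 0 0'
  grep -qxF "$entry" "$fstab" 2>/dev/null || printf '%s\n' "$entry" >> "$fstab"
}

# Usage (as root), then create the mount point and mount everything:
#   add_utm_share /etc/fstab
#   mkdir -p /mnt/utm && mount -a
```

Running it a second time is harmless, since the exact-line check with grep keeps the entry from being duplicated.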

kilala.nl tags: work,


2025-01-02 13:02:00

Yes, it's official now: I am also LFCS certified. Not because I need it for my resumé, but because I want to be certified for every class/course I teach.

Yesterday I mentioned I took the LFCS exam. I'd been wanting to do it for a while now, out of professional interest, but I kept putting it off. Spurred on by December's success with LPIC-1, I decided to take the plunge.

It was fun. I truly enjoyed the LFCS exam and preparation.

As I mentioned in yesterday's review of the big four Linux sysadmin exams, the LFCS fee includes two exam takes, but also two practice exams! That's some great value!

Like with my CKA Kubernetes exam, the practice exams are arranged via Killer.sh. They offer excellent exam simulations, which work exactly like the real exams! I mean: the user interface and the process are the same. Of course the assignments are not. ;)

As many have said: the Killer.sh practice exams are actually harder than the real LFCS exam. On my first practice round I needed 90 minutes for 17 assignments. When I did the real exam, I only needed 60 mins for 17 tasks.

The exam environment is solid, the interface is good, the assignments/tasks are clear. I absolutely love that every task has its own VM/container! With RedHat's exams you get one system for all your tasks and if you break that system you outright fail the whole exam. That's not a risk you run with Linux Foundation! Great stuff.

I can heartily recommend this exam; it's my favourite of the four!

kilala.nl tags: studies, work,


2025-01-02 05:06:00

On the tail end of 2024 I have finally achieved my goal of holding all four entry-level Linux system administration certifications. I set this goal so I can test-run all four exams for my students, to see which one's "the best".

Spoiler alert: there is no singular "the best".

I will be taking a look at the four big brand names: CompTIA, Linux Professional Institute, Linux Foundation and RedHat.

Exam type:

Exam format:

Exam time:

Exam costs (no training, only examination):

Current version:

Certification vendors are expected to provide continuous improvements to their exams. CompTIA is on a solid three year renewal cycle, where their Linux+ exam and objectives are completely refreshed. LPI on the other hand is really dragging things along, with an exam that's now over six years old.

Linux Foundation and RedHat frequently update their exams and their objectives, but don't offer much clarity about the content changes.

Exam objectives documentation:

CompTIA and LPI reign supreme when it comes down to publishing their exam objectives. They provide very clear documents, detailing exactly which topics, concepts, commands, etc will be covered in their exams.

LF and RH on the other hand offer short bullet point lists of one-sentence task descriptions which provide no guidance whatsoever as to what a student would need to learn or practice. With them, you will need to rely upon a training or book to give you guidance.

My opinion on the curriculum and exam contents:

I have compared the exam objectives for all four exams. You can read the full details in this blog post.

For Linux+ I did a beta-test of the upcoming version 6 and I'm not as happy about those objectives, compared to version 5. Here's my review.

Study materials:

All four vendors offer exams in test centers. The number of available testing centers differs per region. I haven't done exams in test centers for years now, I always test remotely, so I'll only compare on that basis.

Remote testing software:

Remote testing ease-of-use and user-friendliness:

You will find so many people on Reddit and Discord who complain about, or fear, the OnVue remote testing for CompTIA exams. Horror stories about mean proctors, or bad software abound. I have now taken over twenty remote exams via OnVue and I have had four situations in which I could not start or finish the exam, three of which were my own fault. In other words: roughly 1 in 20 failed because of the proctoring solution, while 19 in 20 went fine on OnVue's side.

The RedHat Kiosk solution is something I hate because the process of setting it up is abysmal. You have to make a bootable USB with their custom Kiosk OS which is known to have hardware compatibility issues, plus you have to have two webcams. Here's my experience from 2023.

The exam itself:

As far as I am aware, the Red Hat hands-on exam will give you two virtual machines and you do most of your work on one of them. The risk in this is huge: if you manage to break that one single machine, you will fail the exam outright!

I like Linux Foundation's approach a lot better: (almost) every assignment runs in its own virtual machine or container. If you break one assignment, all others will still be scored!

I disliked the LPIC exams, their questions were boring and dry. As usual I like how CompTIA write their multiple choice questions, but their PBQs generally range from "meh" to "awful".

This is a tough nut to crack, as return on investment will differ greatly per region/country. For example, CompTIA is a big brand name in the US but in EUW it may garner a "comp-who-now?".

Brand name recognition, by checking LinkedIn jobs that ask for this cert (in the Netherlands, as per today):

Oddly, some positions on LinkedIn ask for "LPIC2, RHCE or Linux+", suggesting they feel Linux+ is equivalent to higher level certs. Which it isn't.

Four job postings ask for "a Linux certification such as ...". I have not included those in the totals shown above, but you could consider that a +4 on each.

It's odd, but LFCE from Linux Foundation seems to be more well-known than LFCS, with 3 vs 1 job listings asking for it.

I can't tell you which Linux certification you should pick, most importantly because of that last paragraph: return on investment is heavily regional. You must always check your local job boards! See which certifications are, or are not, in demand in your area.

My personal view points?

It's clear that RedHat's certifications offer very big resumé value, as they are world-renowned. Everybody knows and respects them. The big downside is their price point, although they have compensated for this by offering one free retake since 2023. Before that, their proposition was awful, with every retake also costing €500.

I'm a fan of Linux Foundation and the work they do. Their exams are also excellent and I love their at-home testing solution; it just works.

LPI? I don't like. They feel stuffy and outdated. Done.

I like CompTIA's exams well enough, their curriculum is great, their price point is the best. It's unfortunate that Linux+ doesn't get the recognition it deserves. Because of that, I wrote in 2023: "CompTIA Linux+ is not worthless, it's just worth less".

It's ironic that the two exams/vendors I like best, are also the least well-known.

I feel CompTIA offers a better theoretical exam than LPI and I feel Linux Foundation's hands-on exam is much better than Redhat's. But the resumé value of both throws a spanner in the works. :(

Well-known author and fellow-trainer Sander van Vugt and I spoke on LinkedIn about this article. To quote him:

"...I do agree to your conclusions. RHCSA has a huge market value, LFCS is more interesting and more about Linux. Linux+ is important for NA customers, and LPIC-1, well, I dropped that about a decade ago. Their way of testing doesn't make sense to me."

kilala.nl tags: work, studies, mentor,


2024-11-26 15:02:00

Ever since LinkedIn introduced their Verifications, they've been constantly pushing all their members to get verified because (of course!) other members will be more likely to trust you. Since the verification process generally involves using Persona to read your passport, a lot of people are flat out refusing to do so. Be it for privacy, be it for deadnaming or for other reasons, there's plenty of discussion about red flags.

Reading through LinkedIn's verification options, I noticed there's an alternative: employment verification, where your employer will confirm that you are indeed in their service. Interesting!

Since I am self-employed and I own an actual company, does that mean I can verify myself? Why yes, yes it does.

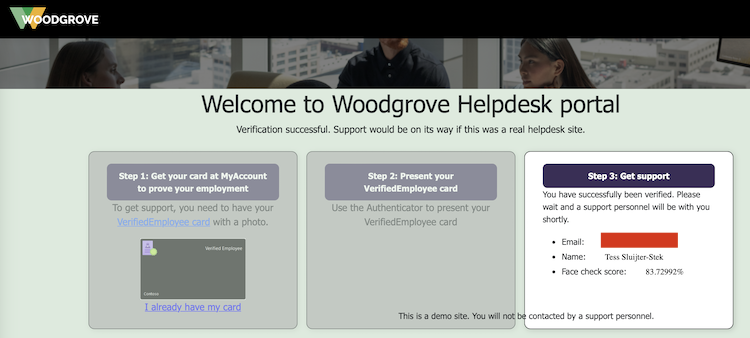

I did some reading and pieced together some documentation:

The process I followed is as follows:

*: If you do not have a photograph of yourself set up under My Account, Face Check will fail. It will give you an error message like "No face detected in Verified ID. Use a different Verified ID with a better photo and try again.".

After setting myself up with a Verified ID, I used Microsoft's Woodgrove public test app. Here, I clicked the option that I have a Verified ID, which now gives me a QR to scan. I do so with the Azure MFA app, which prompts me if I indeed want to share my identity.

The Azure MFA app then starts the front-facing camera, makes a whole bunch of photographs and then uses Microsoft's AI to compare them to the photograph that's set up in my Verified ID. This is why I earlier ran into the "No face detected" error message: my account avatar was the Unixerius logo instead of my actual face.

And it works!

Next up: I have submitted a request to LinkedIn / Microsoft, as per the instructions detailed here. I hope that they will in fact enable Workplace Verification for Unixerius.

This has been an educational day!

kilala.nl tags: work, sysadmin,


2024-11-08 14:39:00

Almost a year ago I had my first frustrating experience with the OnVue checkin process on my mobile phone. Today I learned a new aspect to this: the OnVue checkin process does not work on Apple iOS devices that have Lockdown Mode enabled.

Aside from that OnVue was great to work with, as always. The proctor was polite and efficient, I got my remote testing setup approved really quickly. The software worked fine, the checkin went well, we went over the rules quickly and I was allowed to start testing within 15 minutes.

I don't know what's up with people on Reddit, who complain about OnVue and proctoring. I have a head cold and I coughed and sneezed and snorted a lot during my exam. I had zero complaints from anyone!

As to the XK1-006 Linux+ beta exam: I'm not as enthused as I was about XK1-005.

I had 115 questions, 4 of which were PBQ. I needed a bit more than two of the three hours I'd been given. A lot of my time went into filling out comments, giving feedback to CompTIA. I just really hope they actually get and read all those comments, so that wasn't wasted time.

One thought struck me earlier today: I get the feeling that CompTIA are trying to shoehorn Linux+ into DevOps+ or something. They're adding on all kinds of stuff that doesn't belong on a junior Linux sysadmin exam and instead should be on an exam for more experienced people with a more diverse job role.

I think that, if CompTIA don't change the objectives to focus back on Linux, I'll suggest we switch to LFCS (or even LPIC) with my students.

kilala.nl tags: work, studies,


2024-11-07 13:12:00

Next year the Linux+ certification exams from CompTIA are due for their new version. 003 was the first one I ever did and we're now moving to 006!

As is tradition, I've made a comparison of the exam objectives:

Nov 8th'24 disclaimer: these comparisons were made using information available at the time. This information is subject to change, as CompTIA can and will tweak exam objectives. Always grab the latest objectives doc.

Disclaimer 2: My comparison does not go into details! It takes the high-level objectives and matches them. There will be a lot of small changes, most notably in commands that are, or are not, covered. Always study using the full objectives document!

The comparison also includes comparisons to LPI Linux Essentials, to LPIC1 and to RHCSA for good measure. All of this is very rough and not detail oriented; it just gives a broad overview of the differences.

The changes I've noticed, going from 005 to 006:

If anything I feel that this exam is trying to do too much.

When 005 introduced basic conceptual understanding of Kubernetes, Ansible and so on, next to in-depth container operations, I was happy. Just a glossing-over of the concepts, so students would understand what we use Linux for.

But now, the fact that those things have been given objectives of their own with extensive lists of terminology? I feel it's too much.

The addition of AI also just feels like CompTIA have a 2023-2025 mission to update every single exam to include AI/LLM.

So, either the curriculum for 006 tries to do too much, or CompTIA say these are exam objectives while in reality just glossing over these topics anyway.

EDIT:

For those looking for learning resources, as always you're going to have to work with the current version's materials and then fill in the blanks. As per my comparison, the blanks are pretty considerable, so prepare to learn a lot.

In my class we use the Sybex book, which is decent and comes with practice questions and exams. But use whichever you like! McGraw-Hill and Pearson also have good books.

There are commercial video courses (though I've heard bad reviews of Dion's) and Shawn Powers has a free series on YouTube.

I share all my labs and practice exams here -> https://github.com/Unixerius/XK0-005/

kilala.nl tags: work, studies,

2024-10-12 22:09:00

I volunteer for Wiccon, a cybersecurity conference here in the Netherlands. Last year I gophered on-site and did a presentation on stage. This year I'm gophering again, I helped in the CFP (call for papers) and I'm in charge of the gopher-planning. I'd also submitted an abstract, which was ultimately not chosen.

A few days ago Chantal reached out to ask if I could maybe do my proposed presentation after all, because another presenter had become unavailable. After some thinking and puzzling I thought I could make it work. I had nothing but my abstract, but with 2.5 weeks remaining I could maybe make it work. Right?!

Well, it's caused me a lot of anxiety, to be honest! As I said, I had only the concept of what I wanted to present about, but not even a skeleton or a set of research. I'd not worked on that since my CFP submission was rejected.

This morning I reached out to Chantal and Dani to tell them I couldn't do it.

I'm preparing to teach four classes (DevSecOps in October, Linux+ in November and Linux Essentials and LPIC1 in December), I've got family matters and my primary customer. Shuffling priorities would free up some time, but going from zero-to-complete is simply not possible. I can't do it.

It's ironic that I would fall for this trap, even after telling Roald not a month ago that "I want too much, I'm too greedy".

It felt like I was letting down valued colleagues, friends even. I'd promised to help them, but I can't. If I did, my health and sanity would suffer, to the detriment of all other commitments I have. So I won't do it.

And it's okay. I'm telling myself that and so are they. It's okay if you can't do something. If I can't do it.

2024-10-12 20:00:00

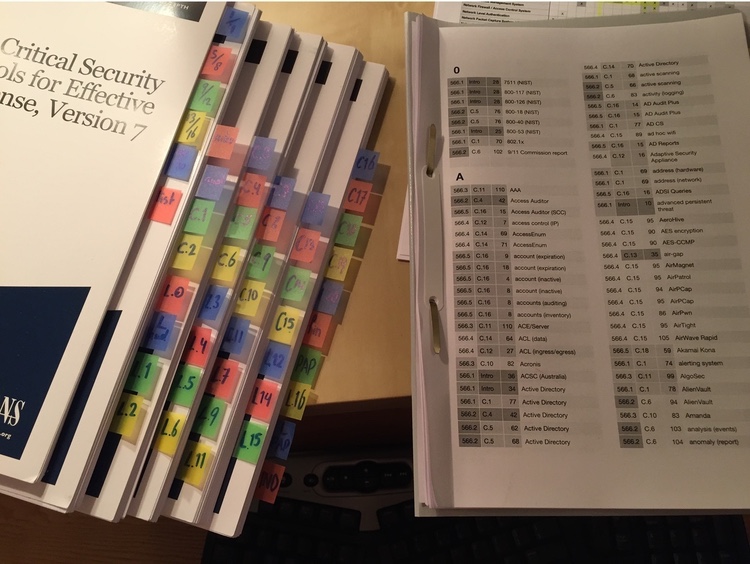

In 2019 I took a class with Russell Eubanks, SEC566 - Implementing the Critical Security Controls.

Lots of people associate SANS with "super-duper-technical" trainings, which SEC566 was not. It was more about understanding the many, many layers of security controls which an enterprise can (should?) apply to properly secure its assets. I learned a lot back then and the group discussions with fellow students were the biggest value-add.

Last week I participated in Russell's LDR521 - Security Culture for Leaders.

If you'd asked me if I'd see myself as a leader, even until a few months ago, I would've said "no". That's crazy, right? I'm just this gal, you know?

I always associated "leadership" with "management". Or even "higher management". But while I've been waxing introspective the past months, I realized that the past three to four years I have in fact been acting in a leadership role. As in: leading by example.

I've helped start two brand new DevSecOps teams, both having common goals:

Heck, a few years ago my team at the time got an in-house award, for leading security culture! So yeah. I guess I am in a leadership role now!

Which is why I applied for a SANS Facilitator role for LDR521, a security culture training developed by Russell and the famous Lance Spitzner.

There's literally no technological learning to this class, it's all about understanding business, management, finance, "selling" to your audience, training and more. All the things you need to understand, to pick apart existing culture, so you can affect change.

The two taglines for the class are on their challenge coin:

As is expected of SANS, it's "drinking from the firehose". There's an incredible amount of information to take in during the four days of class. While LDR521 doesn't have an exam of its own, you could say the fifth day itself acts somewhat as an examination! The capstone project has our teams tackle six challenges in improving security culture at the fictional family-owned Linden Insurance. It's hard work! Every challenge needs you to dig deep and remember the lessons you were taught in class. If not? Culture at Linden remains suboptimal or even suffers!

Coming from a highly technological background, the LDR-series of trainings requires that you drop your preconceptions about "what is right".

I for one hold strong opinions about the Right Course to sail and I have on multiple occasions been frustrated with management not understanding why my team was Right. I have an ingrained allergy to "the suits" and have had a disconnect between "mission, vision, strategy" and what we were doing in tech.

Well. This class helped break down walls which were already cracking.

Thanks to this class I have formalized things I have been doing the past five years. My teams were somewhat successful at guiding security culture, now I know there's actual words for and theory behind what we were doing. And yes, I am now starting to understand why aligning with "mission, vision, strategy" plays such a big role in culture. Heck, now I even know what this "culture" actually is! It's that iceberg-under-the-water, the "perceptions, attitudes and beliefs" that LDR521 so heavily features in its slides, theme and challenge coin.

I very much would like to also do the other two classes in this leadership triad, LDR512 (security management essentials) and LDR514 (security planning and strategy). And once 521 gets an exam, I'll jump on it!

For now? My brain is mush. I need to deflate, reconnect with my loved ones after a week of absence and then I'll go over all the materials a second time. I need to solidify my understanding!

kilala.nl tags: work, studies,

2024-09-27 13:09:00

A new client has asked me to teach short sessions preparing trainees for the Linux Essentials and part of the LPIC1 / RHCSA exams.

Since I already teach Linux+, I thought I'd do a quick comparison of the exam objectives between the three big names. This comparison is only valid for the versions current per September 2024.

The PDF linked below has a number of columns which might not be self-evident. From left to right:

Rows marked "-" mean the objective is not on the mentioned exam. A red box marked "-" means the same, but also indicates that I feel it's something that should be on the exam. Or at least should be taught to a new Linux sysadmin.

LinuxPlus-LPIC1-RHCSA-ITVitae.pdf

kilala.nl tags: work, studies,

2024-09-13 14:24:00

Hot on the tail of last night's didactics training with Rick at Security Academy, I decided to immediately tackle one of my biggest pitfalls: I'm not an observant person, I take a lot of things at face value.

To help myself notice (and track) student behaviour in class, I whipped up a booklet with key behavioural patterns and Likert rankings. I'm sharing it under CC BY-NC-SA (meaning anyone's free to use and change it, but not for commercial purposes).

kilala.nl tags: work,

2024-09-12 21:56:00

I love teaching. I very much enjoy helping people understand and apply new concepts.

Thus I'm very grateful for the opportunities I've been granted! I've taught in-house classes with my customers and I'm a returning instructor at ITVitae. I teach Linux, DevSecOps and security concepts, all with a lot of professional experience and a modicum of didactics. I got lucky this week, on the latter part!

But first: I'm looking to diversify my portfolio. I'm already a backup trainer for Firebrand and Logical Operations, but I'd like to actually teach more frequently! Which is why I've reached out to Security Academy, here in the Netherlands, earlier this year. At the time I had a great first meeting with Rick. Great insofar that we both saw opportunities and because that single hour held some educational nuggets of wisdom for me.

This week I had my try-out training session with them and let me start with my conclusion:

Even if I had not been hired, I would have still come out as a winner.

The try-out session had me prepare a 15-30 minute mini-class (which I did on zine-making). My audience consisted of three Security Academy colleagues and Rick observing as the fourth person present. What I didn't catch on to, was that the three colleagues were put into very specific roles: the enthusiast, the disruptor and the dead horse.

In just half an hour, they managed to find a whole bunch of my pitfalls and strong points! Some of my pitfalls I was already aware of, sometimes painfully so, others were novel.

Hence why I say: even if Rick decided not to move forward with me, I'd still taken a free masterclass!

Well, speaking of?! Rick invited me to take part in two masterclass sessions on didactics, with other Security Academy trainers. Those sessions? Awesome! The theoretical parts were a repeat and solidification of things I'd learned in my CTT+ certification. The practical session uncovered more pitfalls which I was not consciously aware of. I'm very grateful to have been part of this free masterclass!

Now... As I told my co-worker Roald: I have a tendency of biting off more than I can chew.

This is yet again evidenced, this time by me taking on a new customer! So, not only have I been accepted as trainer by Security Academy, I have also been hired (with two actually planned classes!) for teaching Linux to a group of trainees.

kilala.nl tags: work,

2024-07-26 10:27:00

This morning on my way to work I listened to the latest episode of Open Source Security podcast. Their topic was very relevant to a past-intern Jana's master's research and to my current intern Cynthia's software project.

Specifically, episode 438: CISA's bad OSS advice, vs the White House's good advice

They made some very good points speaking against our team's ideas of doing risk analysis on open source dependencies. I really like it when smart people provide counterpoints to my own thoughts.

My teams and interns followed a classical approach, where we discern a number of key factors and metrics to determine whether a software dependency is "trustworthy".
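To give an idea of what such a factor-based approach looks like, here's a minimal sketch. The factors, thresholds and weights below are made up purely for illustration; they are not the ones our projects used, and picking them well is exactly the hard part.

```python
from dataclasses import dataclass


@dataclass
class Dependency:
    # All fields are hypothetical example metrics.
    name: str
    days_since_last_release: int
    maintainers: int
    open_critical_vulns: int


def risk_score(dep: Dependency) -> int:
    """Add up penalty points; a higher score means less 'trustworthy'."""
    score = 0
    if dep.days_since_last_release > 365:
        score += 2  # project looks stale
    if dep.maintainers < 2:
        score += 1  # single point of failure
    score += 3 * dep.open_critical_vulns  # known, unfixed problems
    return score


stale = Dependency("examplelib", days_since_last_release=400, maintainers=1, open_critical_vulns=1)
fresh = Dependency("otherlib", days_since_last_release=30, maintainers=5, open_critical_vulns=0)
print(stale.name, risk_score(stale))
print(fresh.name, risk_score(fresh))
```

A score like this is only a conversation starter: it tells you where to look, not whether the dependency is actually safe to use.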

I still like that we did these projects, because they can provide insight into our risk exposure. But I agree with the podcast's presenters that such a tool doesn't provide a solution to the problem.

They pointed to CISA's recent document about this very same problem, which they dubbed unhelpful. And they referred to Mitre's Hipcheck, which is another software solution for risk assessment on open source dependencies.

It all sounds like great materials to read into! It might even make for some interesting conclusions and counterpoints for our current intern's final report.

kilala.nl tags: work,

2024-07-22 11:15:00

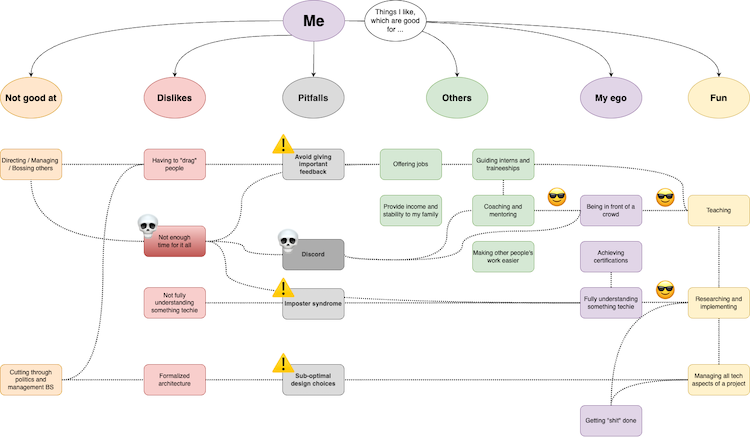

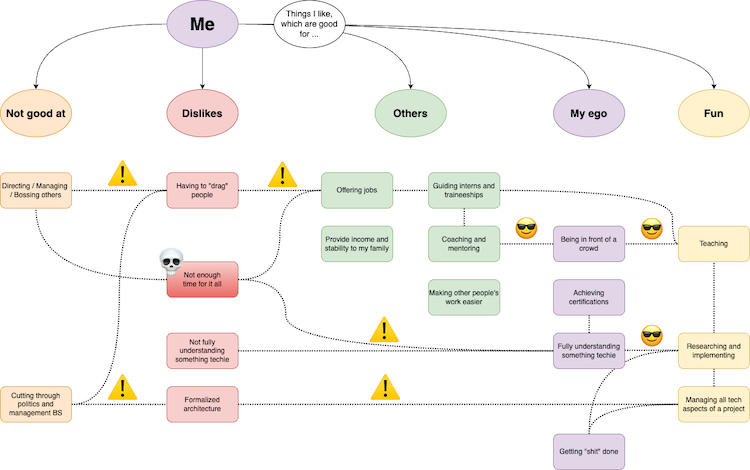

Here's a bigger version of the mindmap shown above.

After last week's introspection about my career, I've been doing a lot more thinking. I've put a lot of thought into which technical and non-technical skills I need to develop, to stay current in today's market. I'll talk about that another day.

I also realized that I left out one important thing in my mindmap: my personal pitfalls.

kilala.nl tags: work,

2024-07-18 21:52:00

The past few days I’ve felt a bit stuck in my work, feeling the need for some change although I’m not quite sure what yet. My weekly routine has been quite that: routine.

Every week, I spend four days with my primary consulting customer and the fifth day I teach classes at ITVitae. For over a year I’ve been thinking how I could change that up, especially now that I’m self-employed and “a businessperson”. I don’t just get to run my own career, I have to!

I’ve been juggling all kinds of options.

After a rather big family event (one of our two cats passed away) I turned all the sourer and more introspective. I think I want something to change, but I’m not sure what.

So, I got to mind mapping and brainstorming. Thinking about things that give me energy and things that really eat energy from me. I put those into clouds of “things I like which help others”, “things I like which feed my ego”, “things I enjoy”, “things I dislike” and “things I’m not good at”.

Which resulted in the overview you see above (here’s a larger image). EDIT: Just to give more insight into my process, here's what the whiteboarding session ended up looking like.

I then looked at where those things either feed upon another, or where they clash.

For example:

So… Decisions!

This introspection has been useful!

I’m not done yet though. I need to rethink my planned learning path, to make sure I’m still investing time in the right things.

kilala.nl tags: work,

2024-06-09 14:18:00

It's been a busy weekend! After spending yesterday at AnimeCon, today I focused on household and on another CompTIA beta exam. About a month ago I wrote about the betas for Pentest+ and SecurityX, today I did Pentest+ PT1-003.

The PT1-003 objectives are available here.

Here's my thoughts on the exam:

In short: I think it's good! At least as good as the SecurityX beta, maybe even better. And much better than the Cloud+ beta which was kinda bad.

kilala.nl tags: work, studies,

2024-05-20 19:47:00

This morning I woke way too early to take CompTIA's CA1-005 SecurityX beta.

118 questions and I used two hours (out of the three allotted). I thought the invite said four hours max, but okay, fine. I thought the MC questions were mostly pretty good, only a very small amount of stinkers. The PBQ weren't that exciting though, could've been more.

One thing that stands out: CAS-004 was the first CompTIA exam to introduce a PBQ in a real Linux virtual machine. CA1-005 has removed all of the Linux commands from the objectives, which suggests that CompTIA decided to kill that particular subject and the VM PBQ. I for one did not encounter the VM at all.

All in all, is the CASP+ / SecurityX a competent, more technical alternative to CISSP? I think it's not far off! Now the problem to tackle is brandname recognition.

kilala.nl tags: work, studies,

2024-05-10 17:12:00

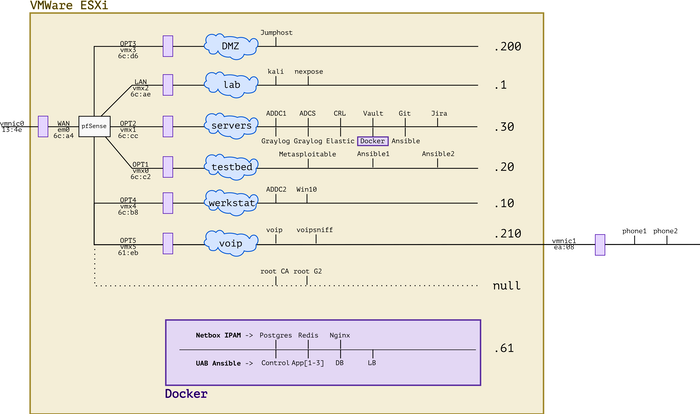

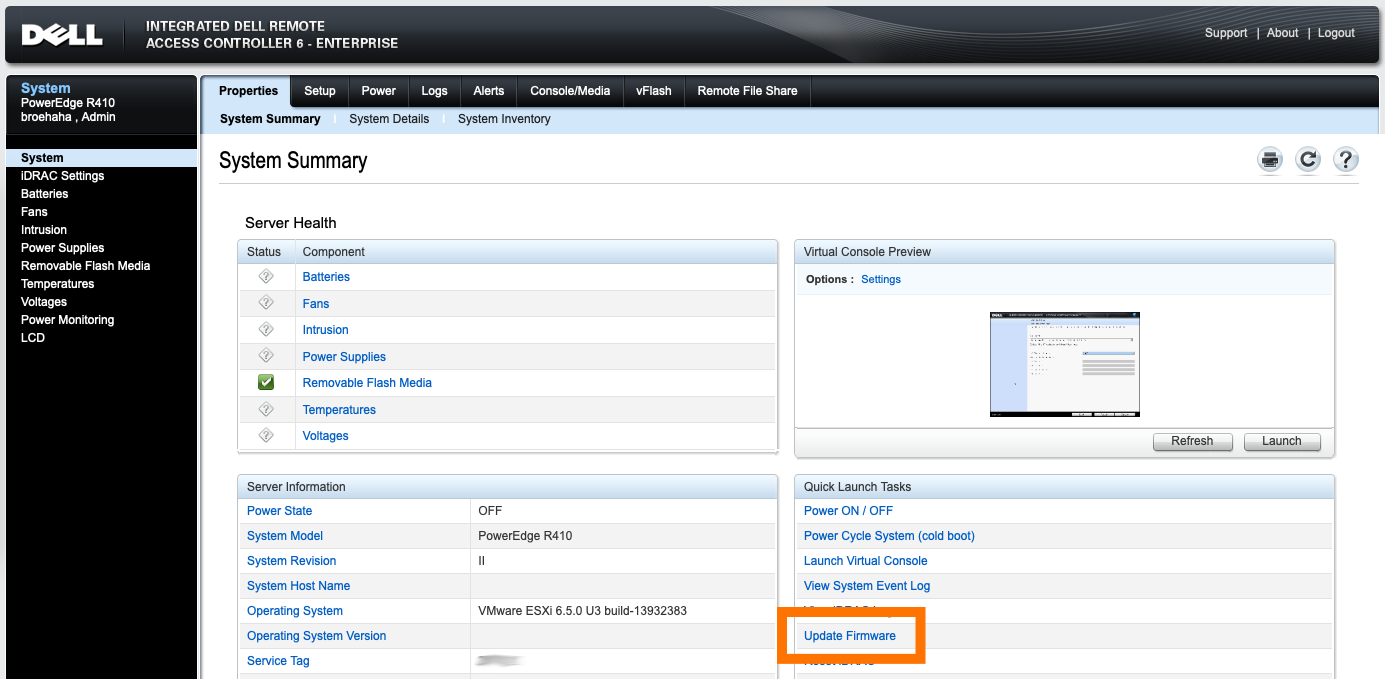

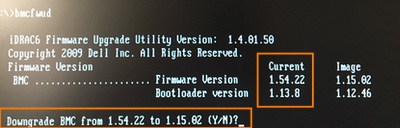

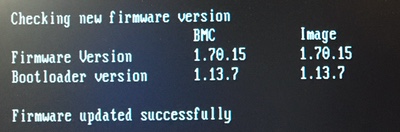

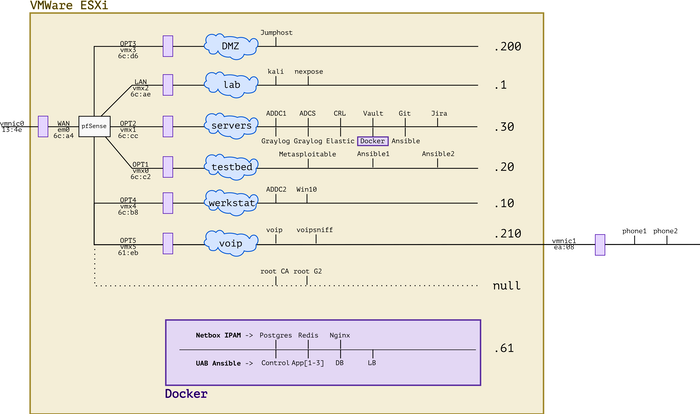

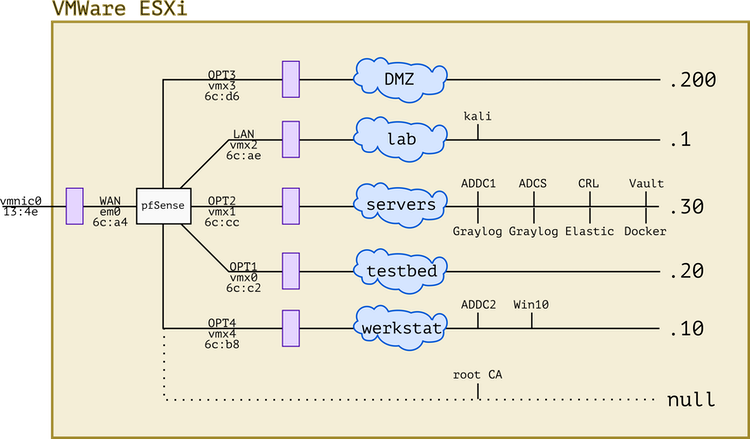

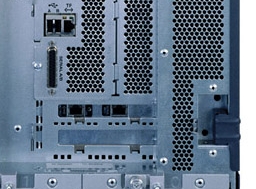

It's been almost a year since I last fired up my homelab. I haven't had a need for the 20+ VMs since I did my Ansible and CDP exams, as I prepared for just about all the other exams on a smaller, local env.

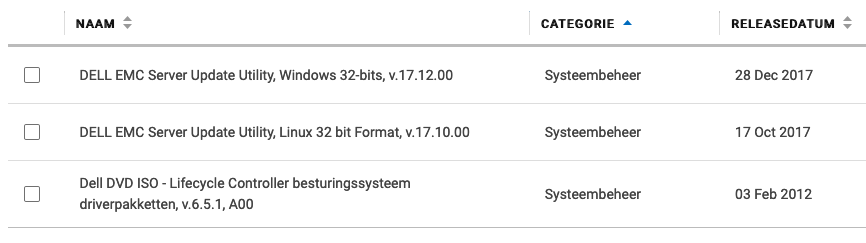

A few weeks back I decided to fire up my R710 again, to see if everything still works. It's antiquated and it runs a version of VMWare ESXi 6.5.x. Since its boot drive is a USB flash drive, I was a bit worried.

Lo and behold, I am greeted by a pink/purple screen that says:

failed to mount boot tardisk

Whelp... I have some inclination what that means and I don't like it. Unfortunately the Internet also wasn't of much help, as that exact error appeared once on a German forum.

After some messing about, I'm happy to learn that my USB boot drive still had a recovery option! Pressing <shift><r> when told to, pops me into recovery mode. It tells me I can restore a previous install (which curiously had the exact same OS version), which I did.

By the sounds of it, all my VMs are booting again. :)

Now to make a backup of that flash drive!

kilala.nl tags: work, studies,

2024-02-02 07:28:00

In 2020 I took the CV1-003 CompTIA Cloud+ beta. Back then I wasn't really impressed with the quality of the exam. Well, it's time for the next version!

A few weeks ago I took CV1-004 for $50, to see if it's better than last time. Yes, but no.

The questions on the new beta were more diverse than last time. And I still like the exam objectives / curriculum. But in general, I wasn't a fan of the exam questions. I know CompTIA often has questions where you're not supposed to think from real-life experience, but this time around it's really pretty bad. Know that meme of grandma yelling "that's not how any of this works!". Well that was me.

Especially the PBQs felt like CompTIA were struggling to come up with something that works. And if I have to see one more white-clouds-on-blue-sky stock photo I'll scream.

Jill West, an instructor on CIN, wrote it pretty eloquently:

"That was a bizarre exam. Only one of the PBQs really seemed appropriate to the test [...] Some other questions seemed like someone was looking at the objectives to write their questions but didn't really understand the concepts; they just used several items from the objectives as "wrong" answers when those options really weren't congruent with each other [...]"

So yeah. If there's a student interested in learning about cloud computing, I would suggest they read the materials, but I wouldn't suggest they take the exam.

===

After passing PDSO's CASP API security exam, I thought I'd look at some of their competition. I'm still going through APISec University's courses (which seem good), but I also gave their CASA exam a quick shot.

In short: I will definitely recommend their training materials to students, but not the CASA. CASA is:

Points 2, 3 and 4 unfortunately mean that, from an employer's point of view, the certification isn't worth much because there's no guarantee that whomever has it didn't cheat in some way. Basically my biggest critique of PDSO's exams as well (which has points 2 and 3, but not 4).

The questions on the test were well written, so that's something. They are a decent way for someone who's taken the APISecU classes to test themselves. And the potential employers will simply need to do better BS-testing in interviews. :)

kilala.nl tags: work,

2024-01-21 15:21:00

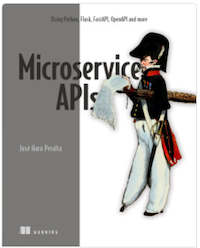

In the months leading up to my PDSO CASP studies I read José Haro Peralta's "Microservice APIs". On and off, between classes and between other things I was learning. It's been a long read, but I can heartily recommend it.

I can honestly say that José's excellent book is what taught me most of what I now know about how APIs work! And it most certainly made a lot of things clear, which I also learned about in CASP.

Before I read "Microservice APIs" I had a foundational grasp of how REST and SOAP APIs look from the outside, as consumer. I'd used OpenAPI specs, I'd read through WSDL files and I'd made API calls through HTTP. But I never really understood how it all worked on the server side.

José's book makes all of that server side magic crystal clear!

The book explains foundational and deep technical aspects of building multiple interacting APIs, which together form the backend of an online coffee product shop. And José shows all of it! All the Python code to load the frameworks, to write the queries and to build the endpoints. All of the code needed for GraphQL and two different REST implementations. And even a bit of authentication and authorization! Heck, appendix C of the book turns out to have exactly what I was looking for when I wanted to learn about integrating OIDC and OAuth into the authorization checks of an API!

If you hadn't guessed yet: "A+ would recommend".

kilala.nl tags: work, studies,

2023-12-01 20:11:00

A few weeks ago my company became an official Delivery Partner of CompTIA, which means that I can now officially also teach classes on their behalf. I've already taught Linux+ for a few years, at ITVitae, but that's using my own materials and the Bresnahan/Blum book.

One other benefit to this partner status, is that we can purchase exam vouchers at a 20% discount. In this, I see the opportunity to help struggling newbies who want to break into IT, even if it's just a little.

In my life, I was helped by a great number of people and thus I firmly believe in "lifting up" and in "paying it forward". If I can take a small financial hit, in order to help people take their exams at a cheaper rate, I'll gladly do it.

Having no prior experience in running a webshop (aside from a few internship projects 25 years ago!), I looked for the nicest-yet-low-barrier solution.

The Unixerius site is built using Rapidweaver, a MacOS WYSIWYG editor which has made it very easy to quickly whip up a decent looking site. I spent about an hour researching options for affordable webshops, only to be happily surprised by Ecwid.

Ecwid are a webshop SaaS provider who offer a full frontend + backend system. They integrate with the payment providers I would need for the European market (Paypal, SEPA and Stripe, which offers iDeal). Their management system is excellent. And their frontend natively integrates with Rapidweaver.

It took me roughly three hours to set everything up, from A to Z. And it all works very well, I was my own first customer by test-purchasing an ITF+ voucher.

I will not be doing any big marketing for this shop. It's intended to be a small way to help out struggling students. I'm not looking to piss off the big CompTIA partners by severely undercutting them on large amounts of sales.

Heck, I'm restricting voucher purchases to one-per-person, to prevent pissing off CompTIA themselves. :)

kilala.nl tags: work,

2023-11-01 11:48:00

This was so much fun!

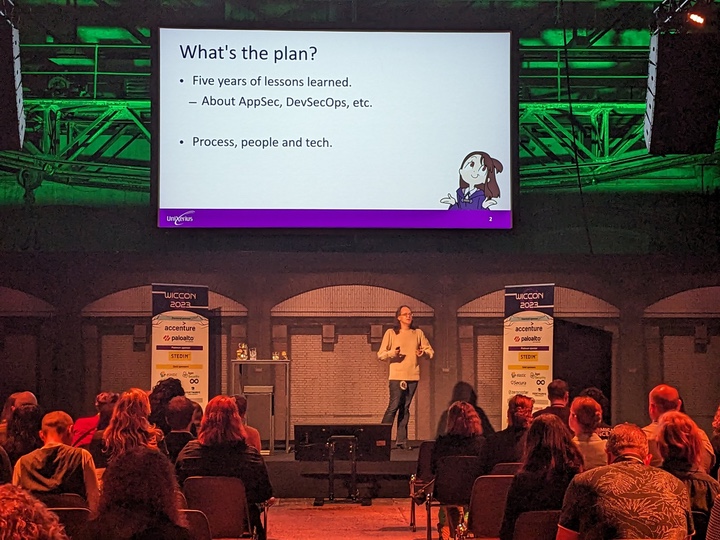

WICCON is an IT conference by women, for everybody, featuring a full cast of just women presenting about their work! Next to volunteering in the Black Cat Society, I also submitted a CFP. My talk was accepted. :)

You can view all presentations on WICCON's Youtube channel.

kilala.nl tags: work,

2023-07-17 20:18:00

I've held off on spending money on a new Mac for a long, long time. I have two Macbook Airs from 2017, which are still holding up admirably for my studies and work. Honestly, their 8GB of RAM and aged i5 are still plenty good for most of my work.

Sure, I did get an Asus laptop with a beefy Ryzen in there, for teaching purposes. But even that's an ultra-portable and nothing hugely expensive.

I've had to bite the bullet though: the chances of me getting students with Apple Silicon laptops are growing. My current group at ITVitae has my first one and it's a matter of time before a commercial customer pops in with an M1 or M2.

So, I got myself a second hand 2020 M1 Mac Mini from Mac Voor Minder. Good store, I'd highly recommend.

I had hoped that, in the three years we've had the Apple Silicon systems out, virtualization would be a solved problem. Well... it's not really, if you want one of the big names.

VirtualBox, forget about that. It's highly in beta and is useless. VMWare Fusion supposedly works, but I didn't manage to get it to do anything for me. And I'm not paying for Parallels, because most likely my students won't either! I need cheap/free solutions.

Turns out there's two.

I'm now rewriting the lab files for my classes, to make them work on M1/M2 ARM systems. I'm starting with the lab VM for my DevSecOps class and then moving onward to two small projects that I use in class. Updating my Linux+ class will take more work.

Maybe I should start making my own Vagrant box images. :)

kilala.nl tags: work, studies,

2023-04-21 18:37:00

It's no secret that I use Ubiquiti equipment for my networking. My office runs on a UDM Pro, which has been great for me.

The UDM Pro performs well and is stable, it has a great feature set and it's easy to manage (for someone who wants to spend little time managing their network). Heck, even site-to-site VPN for my security cameras was simple!

My main WAN connection comes from MAC3Park, my housing company. They recently had an outage on my Internet connection, which lasted a few days. That messes with my backups and a few of my business processes, so I want to have at least some form of alternative in place.

Luckily, the UDM Pro also makes it dead simple to configure automatic failover or even load balancing across two WAN connections! It really is amazingly simple! Or it should be, as we'll see in a bit.

As a second Internet connection, I looked into getting 4G/5G from my mobile provider. Ubiquiti have their own LTE/4G/5G solution, which looks awesome but is a bit expensive. For half the price, I got a Teltonika RUT241 aimed at IoT solutions.

Sure, the LAN port on the RUT241 is slower (10/100Mbit), but seeing how the 4G connection averages around 20MBit that'll be fine. That's also where the "should be simple" I mentioned earlier comes in.

The RUT241 worked great with my laptop, but hooking it up to the SFP RJ45-module on the UDM Pro it just wouldn't go. No amount of changing settings would make it work. Very odd! There was no DHCP lease and even a statically assigned IP wouldn't let me connect to the Teltonika.

Turns out that, upon closer inspection, my vendor sent me the wrong SFP module :) I'd ordered the 1G model (which does 10/100/1000), but they sent me the 2.5G (which does 1000/2500/10000). The latter will not work with the Teltonika.
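A rough way to picture why the link never came up: both ends need at least one speed in common. The sketch below uses the speed figures from this post; the names and the "pick the fastest shared speed" rule are my own simplification, not how the hardware actually negotiates.

```python
# Supported link speeds in Mbit/s, as mentioned in this post.
RUT241_LAN = {10, 100}
SFP_ORDERED = {10, 100, 1000}        # the 1G module I ordered
SFP_DELIVERED = {1000, 2500, 10000}  # the module I actually received


def common_speed(side_a, side_b):
    """Return the fastest speed both sides support, or None if they share none."""
    shared = side_a & side_b
    return max(shared) if shared else None


print(common_speed(RUT241_LAN, SFP_ORDERED))    # 100
print(common_speed(RUT241_LAN, SFP_DELIVERED))  # None
```

With no shared speed there's no link at all, which matches what I saw: no DHCP lease and no reply on a static IP either.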

Time to get that SFP replaced by my vendor and we'll be good to go!

EDIT:

Or even better! I could just switch my cabled connection from MAC3Park (which is 1G) to port 10 and switch the Teltonika to port 9 (which natively does 100/1000). So basically, switch the definitions of WAN1 and WAN2 around!

EDIT2:

That worked.

I made port 9 WAN2 and port 10 WAN1. I switched the cables around and now port 9 happily runs at 100Mbit, connected to the Teltonika.

Even nicer: in bridge mode, port 9 gets the 4G IP address so it's perfectly accessible as intended. But in that same bridge mode, the RUT241 remains accessible on its static, private IP as well so you can still access the admin web interface.

So if, for example, my internal LANs are 10.0.10.0/24 and the Teltonika's private IP is 10.0.200.1, I've set up a traffic management route which says that 10.0.200.0/24 is accessible via WAN2. That way I can manage the Teltonika web interface, from inside my office LAN, even when it's in bridge mode. Excellent!
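The idea behind that route can be sketched in a few lines of Python. This is only an illustration of the decision, using the example addresses above; the route table layout, the interface labels and the assumption that everything else leaves via WAN1 are mine, not the UDM Pro's actual configuration format.

```python
import ipaddress

# Example routes; the structure of this table is hypothetical.
ROUTES = [
    (ipaddress.ip_network("10.0.200.0/24"), "WAN2"),  # Teltonika admin network
    (ipaddress.ip_network("10.0.10.0/24"), "LAN"),    # internal office LAN
]
DEFAULT_INTERFACE = "WAN1"  # assumption: all other traffic uses the primary WAN


def pick_interface(destination):
    """Return the interface for a destination IP, most specific route first."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, iface) for net, iface in ROUTES if addr in net]
    if not matches:
        return DEFAULT_INTERFACE
    # The longest prefix is the most specific match.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(pick_interface("10.0.200.1"))  # WAN2
print(pick_interface("10.0.10.25"))  # LAN
print(pick_interface("8.8.8.8"))     # WAN1
```

So the Teltonika's admin address is the only thing that gets pinned to WAN2; regular traffic is unaffected.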

EDIT3:

I tested the setup!

Setting the UDM Pro to failover between the connections works very well. Within 60 seconds, Internet-connectivity was restored. It does seem that the dynamic DNS setup does not quickly switch over, so a site-to-site VPN will fail for a lot longer.

Setting the UDM Pro to load balancing didn't work so well. The connection remained down after I pulled WAN1.

kilala.nl tags: work, sysadmin,

2023-04-19 11:29:00

This month, I've put some time into formalizing my experience with the ISO 27001 standard for "Information Security Management Systems". That is, the business processes and security controls which an organization needs to have in place to be accredited as "ISO27001 certified"... which translates into: this organization has put the right things into place to identify, address and manage risk and to provide personnel and management with policies, standards and guidelines on how to securely operate their IT environment.

It's a cliché that people in IT have a distaste for "auditing" and "compliance". And sure, I've never had much fun with it either! But I felt I was doing myself a disservice by not formalizing what I've learned over the past decades. Or to put it the other way around: making sure I properly learn the fundamentals, means that I can assist my customers better in properly structuring their IT security.

So off I went, to my favored vendor of InfoSec trainings: TSTC in Veenendaal. :)

They provide the PECB version of the ISO27001 LI training and examination. The PECB materials aren't awesome, but they get the job done. And yes, if you're a hands-on techie, then the material can be rather dreary. But overall I had a fun four days at TSTC, with a great class and a solid trainer.

The exam experience was a bit different from what I'm used to with other vendors.

TLDR, in short:

kilala.nl tags: work, studies,

2023-03-24 10:27:00

About a month ago I re-sat CompTIA's Linux+ exam, to make sure I am still preparing my students properly for their own exams. I still like the Linux+ exam (which I first beta-tested in 2021) and I'm happy to say that my course's curriculum properly covers all "my kids" need to know.

This week I sat not one, but two exams. That makes four this year, so far. :D

Why the sudden rush, with two exams in a week? I'm applying as CertNexus Authorized Instructor, through an acceleration programme that CN are running. They invited professional trainers to prepare and take their exams for free, so CN can expand their pool of international trainers.

I feel that's absolutely marvelous. What a great opportunity! I heartily applaud CertNexus for this step.

The first exam which I took was CSC-210: Cyber Secure Coder. The curriculum had a nice overlap with the secure coding / app hacking classes that our team taught at ${Customer}, which means it's a class I would feel comfortable teaching. It's not programming per se, it's about having a properly secure design and way-of-work in building your software. The curriculum is language agnostic, though the example projects are mostly in Python and NodeJS.

I went through the official book for CSC and I like the quality. I actually enjoyed it a lot more than CompTIA's style. I haven't gone through the slide decks yet, so I can't say anything about those yet. The exam, I really liked. The questions often tested for insight and when it asked to define certain concepts, it wasn't just dry regurgitation.

I can definitely recommend CertNexus CSC to anyone who needs an entry-level training and/or certification for secure development.

Now, CFR-410 (CyberSec First Responder) is a different beast. I took the beta back in 2021 and at the time I was not overly impressed. The exam has stayed the same: it still asks about outdated concepts and it still has dry fact-regurgitation questions.

I haven't gone through the book and slides yet, I'll do that this weekend so I can update this post.

I have contacted CertNexus to offer them feedback and help, so we can improve CFR. Simply complaining about it won't help anyone, I'd rather help them improve their product.

EDIT: CertNexus have indicated they will welcome any feedback I can provide them for CFR, so that's ace. I will work with them in the coming weeks.

kilala.nl tags: work, studies,

2023-03-18 19:30:00

On Discord, people frequently ask: "is Linux+ worth it?". Here's my take.

The value depends on your market and on what you get out of it. In the US and UK, CompTIA is a well-known vendor but in other parts of the world they aren't. But either way, Linux+ is not very well known.

I teach at a local school to prep young adults for the Linux+ exam. The school chose Linux+ because they can get heavily discounted vouchers for the exams, versus LPI, LF and others. For the school it was a matter of money: they really don't have much money and every dollar helps.

Personally, I feel that the Linux+ curriculum is pretty solid as far as Linux sysadmin certs go. The exam itself is also decent and the vendor is mature.

So in this case the value you'll get is from learning Linux system administration pretty in-depth. You'll also get a slip of paper which some might recognize and others will go "*cool, you passed a cert exam, good job*" (in a positive sense).

Linux+ is not worthless, it's just worth less (when compared to LFCS, LPIC1 and RHCSA).

kilala.nl tags: studies, work,

2023-03-04 08:20:00

Someone on Discord asked: "Question: Does DevSecOps type of work fall under ISSO's roles and responsibilities?"

That got me thinking.

IMO: DevSecOps, like many things in InfoSec, is something everybody needs to get in on!

Architects need to define reference designs and standards. The ISO needs to define requirements based on regulations, laws and industry standards. An AppSec team needs to provide the tooling. Another team needs to provide CI/CD pipeline integration for these tools. And yes, the devops squads themselves need to actually do stuff with all of the aforementioned things. Someone needs to provide training, someone needs to do vulnerability management. Etc.

One book on the subject which I heartily recommend, is the Application Security Program Handbook, by Derek Fisher.

I bought that book right after leaving my previous AppSec role, where we spent two years building an AppSec team that did a lot of things from that list. I was amazed by the book, because cover to cover it's everything we self-taught over those two years.

kilala.nl tags: work,

2023-01-16 07:20:00

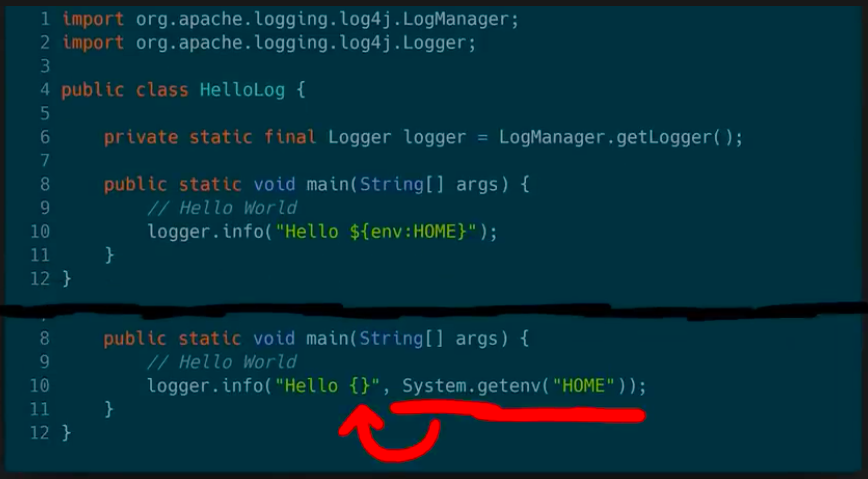

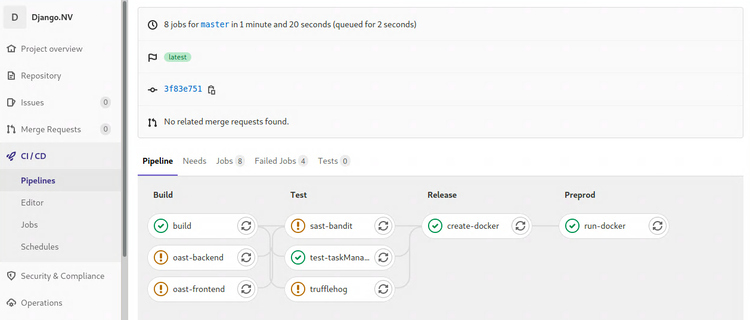

In early 2021 I needed to learn about DevSecOps and CI/CD and I needed it fast. A crash course if you will, into all things automation, pipelines, SAST, SCA, DAST and more. I went with PDSO's Certified DevSecOps Professional course, which included a 12h hands-on exam.

Here's my review from back then, TLDR: I learned a huge amount, their labs were great, their videos are good, their PDF was really not to my liking.

Since then I've worked with a great team of people, team Strongbow at ${Bank}, and we've taught over a thousand engineers about PKI, about pentesting, about API security and about threat modelling. So when PDSO introduced their CTMP course (Certified Threat Modelling Professional) I jumped at the chance to formalize my understanding of the topic.

My review of the training materials is going to be very similar to that of CDP:

I took the exam yesterday and it was great, better than I expected!

For anyone looking for tips to take the CTMP exam:

kilala.nl tags: work, studies,

2023-01-08 19:58:00

Way back when, over ten years ago, Dick had rented some local office space for Unixerius. He used it for storage; I don't think anyone ever did any actual work over there. So, that rental space wasn't long-lived.

After Dick's passing in 2021, I took over running Unixerius in January of 2022. One practical hitch about owning a company which I didn't care for, is having my private home address in the chamber of commerce's registry. That's why I rented a flex-desk at the now defunct Data Center Almere.

As of the start of 2023 I'm renting an actual office space again, at MAC3Park. They gave me a good deal on a 25m2 room, with electricity and Internet access included. And because the previous tenant had left in a hurry there was even some furniture left behind! They were going to toss it all, but I was very happy to have a big desk, decent chair and a comfy sofa!

The only downside to the room was the awfully bad paintjob a previous tenant had done. Dreary grey, with streaks, splotches, grease marks and overspray. I spent the week between Christmas and New Year's redecorating and cleaning. It's now a very, very comfortable office for work and studying!

The Ikea bookcase used to be in my kid's room and now holds memorabilia from past jobs, teams, colleagues and students.

kilala.nl tags: work,

2022-12-29 19:27:00

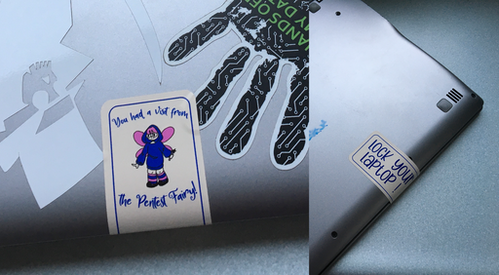

My colleague and I have often wondered about people leaving their laptops unattended and unlocked. We've found them in offices, in restaurants and even lavatories!

This inspired me to do a co-op with my daughter, who took my character design for the #pentest fairy and put her own twist on it. We now have a stack of vinyl stickers (safely removable!) which you can slap on any abandoned hardware.

"You had a visit from the Pentest Fairy! Lock your laptop!"

2022-07-09 20:52:00

This fall I am scheduled to teach an introductory class on DevSecOps, to my Linux+ students at ITVitae. Ideally, if things work out, this will be a class that I'll teach more frequently! It's not just the cyber-security students who need to learn about DevSecOps, it's just as important (if not more) to the developers and data scientists!

Since this course is going to be hands-on, I'm prepping the tooling to configure a lab environment with students forming small teams of 2-4. I'd hate to manually set up all the Azure DevOps and Azure Portal resources for each group! So, I'm experimenting with azcli, the Azure management command line tool.

Sure, I could probably work even more efficiently with Terraform or ARM templates, but I don't have enough time on my hands to learn those from scratch. azcli is close enough to what I know already (shell scripting and JSON parsing) to get the show on the road.

Here's a fun thing that I've learned: every time one of my commands fails, I need to go back and make sure that I didn't forget to stipulate the organization name. :D

For example:

% az devops security group membership add --group-id "vssgp.Uy0xLTktMT....NDk0" --member-id "aad.ODU0MjMyZTAtN...0MmVk"

Value cannot be null.

Parameter name: memberDescriptor

That command was supposed to add one of the student accounts from the external AD, to one of the Azure DevOps teams I'd defined. But it keeps saying that I've left the --member-id as an empty value (which I clearly haven't).

Mulling it over and scrolling through the output for --verbose --debug, I just realized: "Wait, I have to add --org to all the previous commands! I'm forgetting it here!".

And presto:

az devops security group membership add --group-id "vssgp.Uy0xLTktMT....NDk0" --member-id "aad.ODU0MjMyZTAtN...0MmVk" --org "https://dev.azure.com/Unixerius-learning/"

That was it!
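
To stop myself from forgetting it again, here is a minimal sketch of two ways around the problem. It assumes the azure-devops extension for azcli is installed and reuses the organization URL from the example above. The names `ORG_URL`, `set_default_org` and `azdo` are made up for this illustration; only `az devops configure --defaults organization=...` and the `--org` / `--organization` options come from azcli itself.

```shell
# Organization URL, copied from the example above.
ORG_URL="https://dev.azure.com/Unixerius-learning/"

# Option 1: store the organization as a default, so that later
# "az devops" commands no longer need an explicit --org.
set_default_org() {
    az devops configure --defaults organization="$ORG_URL"
}

# Option 2: a small wrapper (hypothetical helper) which appends --org
# to any "az devops" command that does not already specify one.
azdo() {
    case " $* " in
        *" --org "*|*" --org="*|*" --organization "*|*" --organization="*)
            az devops "$@"
            ;;
        *)
            az devops "$@" --org "$ORG_URL"
            ;;
    esac
}
```

With the wrapper loaded, the failing command would become `azdo security group membership add --group-id "..." --member-id "..."`, and the organization gets filled in automatically. Setting the default is less typing, but the wrapper keeps the organization visible in the script itself, which may be handy when juggling more than one organization.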

kilala.nl tags: work, studies,

2022-06-29 21:28:00

At the end of 2021 I took XK1-005, the beta version of CompTIA's Linux+ exam, which went live earlier this month as XK0-005. My opinions on the exam still stand: it's a solid exam with a good set of objectives. And luckily I passed. :D

Yesterday, I took part in another beta / pilot: (ISC)2's ELCC, also known as their Entry Level Cybersecurity Certification. I didn't take it to pad my own resumé, I did it to see if ELCC will make a good addition to my students' learning path. So far they've been using Microsoft's MTA Security (which is going away).

(ISC)2, most famously known for their CISSP certification, saw an opportunity in the market for an entry level security certificate. Some would call it a moneygrab... But the outcome of it, is their ELCC.

Looking at the ELCC exam objectives I have to say I like the overall curriculum: the body of knowledge covers most of the enterprise-level infosec knowledge any starter in infosec would need to know. It's very light on the technical stuff and focuses mostly on the business side, which I think is very important!

I've heard less-than-flattering reviews of (ISC)2's online training materials, meaning that I'd steer students to another source. And, having taken the exam, I have to admit that I think it's weak.

Maybe it's because this was a beta exam, but a few topics kept on popping up in questions with the same question and expected-answer being given in slightly different wordings. With 100 questions on the test, I was expecting a bit more diversity.

I also feel that a lot of the questions were about dry regurgitation: you learn definitions and when provided a description, you pick the right term from A, B, C or D. CompTIA's exams take a very different approach, where you're offered situations and varying approaches/solutions to choose from.

Overall take-aways regarding (ISC)2's entry-level cybersecurity certification:

kilala.nl tags: work, studies,

2022-06-15 06:35:55

Recently I've been thinking back about old computing gear I used to own, or worked on in college. Nostalgia has a tendency to tint things rose, but that's okay. I get pangs of regret for getting rid of all my "antiques" (like the Televideo vt100 terminal, the 8088 IBM clone and my first own computer, the Presario CDS524) but to paraphrase the meme: "Ain't nobody got room fo' all that!"

Still, it was really cool to run RedHat 5 on the Compaq and having the Televideo hang off COM1 to act as extra screen and keyboard.

Anyway... that blog post I linked to, regarding RH5, also mentions OS-9. OS-9 was (is, thanks to NitrOS9) an OS ahead of its time, with true multi-user and multi-processing support and realtime processing, all on hardware that was relatively affordable at the time. It had MacOS and Windows beat by at least a decade and Linux was but a glint in the eyes of the future.

I've been doing some learning! In that linked blog post I referred to a non-descript orange "server". Turns out, that's the wrong word to use!

In reality that was a VMEbus "crate" (probably 6U) with space for about 8-10 boards. Yes, it used Arcnet to communicate with our workstations, but those also turn out to be VMEbus "crates": more like development boxen, with room for 1-2 boards in a desktop box.

Looking at pictures on the web, it's very likely that the lab ran OS-9 on MVME147 boards that were in each of the crates.

Color me surprised to learn that VMEbus and its successors are still very much in active use, in places like CERN but also in the military! They're also found in big medical gear, as this teardown of an Agfa X-Ray machine shows.

Cool stuff! Now I wanna play with an MC68k box again. :)

kilala.nl tags: work, studies, sysadmin,

2022-05-08 09:19:00

It's been a very long time in coming, but I finally passed my CKA (Certified Kubernetes Admin) exam yesterday.

When I say "a long time", I mean that this path of studying started back in August 2021 right after finishing teaching group 41 at IT Vitae. Back then, I started out on the Docker learning path at KodeKloud, to get more familiar with containerization in general. I'd considered going for the DCA exam, but comparing it to CKA I reconsidered and added a lot more studytime to just hop onward to Kubernetes.

I can not say enough positive things about KodeKloud. The team has put a lot of effort into making great educational content, as well as solid lab environments. The cost-value comparison for KodeKloud is excellent! I plan on finishing their DCA content later this year, so I can then turn to RedHat's EX180 (Docker/Podman and OpenShift) exam.

Aside from KodeKloud's training materials, the practice exams at Killer.sh were great. You get two free practice exams as part of your CKA exam voucher and I earned a third run by submitting some bug reports.

Again, the value for money at killer.sh is great: in-depth exercises, a stable testing environment and an exam setup that properly prepares you for the online CKA testing environment.

Finally, the actual exam: registration was an okay process, signing in with the proctor went excellently and the exam itself worked fine as well. I did learn that Linux Foundation are very strict about the name put on your registration. I put in "T.F. Sluijter-Stek" because legally that is my identity, but they actually wanted "${FirstName} ${LastName}" so for me my "${DeadName} ${MaidenName}". Oh well; no biggy. The proctor was very patient while I went and updated my name on the portal.

So to summarize:

kilala.nl tags: studies, work,

2022-04-18 15:52:00

For those who just want the answer to the question: "How do I upgrade a Windows Server DataCenter Evaluation edition to a licensed Windows Server Standard?", here's where I got my answer. I'll provide a summary at the bottom.

---

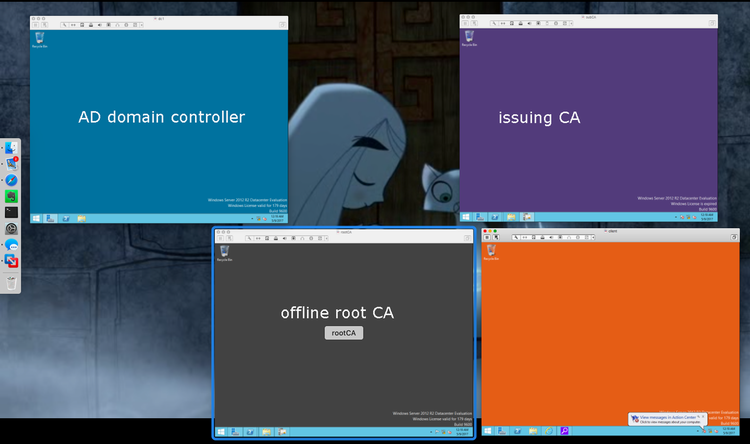

My homelab setup has a handful of Windows Server systems, running Active Directory and my ADCS PKI system. Because the lab was always meant to just mess around and learn, I installed using evaluation versions of Windows Server.

I kept re-arming the trial license every 180 days until it ran out (slmgr /rearm, as per this article). After the max amount of renewals was reached, I re-installed and migrated the systems from Win2012 to Win2019 and continued the strategy of re-arming.

As of this year, I decided to spring for a Microsoft Partner ActionPack.

Signing up Unixerius for the partnership took a bit of fiddling and quite some patience. Getting the ActionPack itself was as simple as transferring the €400 fee to Microsoft and away I go!

The number of licenses and resources you get for that money is ridiculously awesome. Among the big stack of coolness, for my homelab, it includes ten Windows Server 2019 and 2022 licenses. There are also great Azure and MS365 resources, which I'm definitely putting to good use; it's a great learning experience!

---

Upon inspection of my homelab, it turns out that most of my Windows VMs were installed as "Windows Server DataCenter Evaluation", simply because I wasn't aware of the difference between the Standard and DataCenter editions. Now I am. :)